Mil Williams, 3rd July 2023, Stockholm, Sweden

Proposal

Would anyone in #scandinavia, and more specifically, I’d be bound to say, in #sweden, like to begin work on designing and implementing, from scratch, a totally repurposed set of #ai- and #it-related architectures and frameworks that are absolutely future-proof, ethical and privacy-positive?

That is: do for #ai what I have already suggested with the concept of a digital equivalent of the #privacypositive and #secrecypositive attributes that pencil and paper have conferred on us for centuries:

• https://www.secrecy.plus/spt-it

The original “intuition validation engine” README on GitHub

In this case, in respect of #ai- and #it-#tech, I would suggest using a starting point I already described clearly in the original 2019 specification of the #intuitionvalidationengine (i’ve), currently on my GitHub account in private mode and reproduced in full below:

intuition-validation-engine

The goal of this engine is to permit both human and machine intuition to be validated.

This will be done constantly, but not intrusively. People and machines will have a choice, always.

It is assumed that for the purposes of this project both parties will be encouraged to upskill the other in mutual dialogue and equal partnership.

It is also assumed, a priori, that the keywords for the processes involved will be:

1. A procedure of CAPTURE, controlled by humans on the one hand and machines on the other, where neither will be obliged to share ideas, content and personal data that they do not feel safe sharing.

2. A procedure of EVIDENCING, where the captured data can be stored, retrieved, shaped and patterned, and used for supportive purposes that expand the lives and experiences of the beings concerned.

3. A procedure of VALIDATION, where it becomes clear to everyone participating: a) why a human being might believe and act in a certain way; and equally so, b) why the machines that prefer to work within the framework of this project will arrive at their own particular positions and conclusions.

Finally, it is hugely important that everyone who chooses to work on the project might easily understand that it is not a traditional software paradigm: let us assume, instead, that people, code, machines and all other objects participating will form part of a new space we might call “i’ve”.

That is to say, there will be no distinction or hierarchy in this space between the individuality of the objects in question, with respect to their entity as sovereign actors. In this sense, all will enjoy becoming part of a multiple-perspective environment, and all will help to support and contribute to a wider and transcendental knowledge that both befits and benefits others.
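To make the three keywords in the README a little more concrete, here is a minimal sketch in Python of how CAPTURE, EVIDENCING and VALIDATION might fit together. It is only an illustration under my own assumptions, not the actual i’ve code: the names `Capture`, `Engine`, `capture`, `evidence` and `validate`, and the actor labels, are all hypothetical. The one design point it tries to show is that there is no admin role: humans and machines call exactly the same operations, and nothing is stored without the actor's consent.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass(frozen=True)
class Capture:
    """One item an actor (human or machine) has chosen to share."""
    actor: str      # hypothetical label, e.g. "human:ana" or "machine:m1"
    content: str    # the idea or observation itself
    rationale: str  # why the actor holds this position


@dataclass
class Engine:
    """Toy store with no admin role: every actor gets the same operations."""
    _evidence: List[Capture] = field(default_factory=list)

    def capture(self, actor: str, content: str, rationale: str,
                consent: bool) -> Optional[Capture]:
        """CAPTURE: nothing is stored unless the actor explicitly consents."""
        if not consent:
            return None
        item = Capture(actor, content, rationale)
        self._evidence.append(item)
        return item

    def evidence(self, actor: str) -> List[Capture]:
        """EVIDENCING: retrieve what a given actor has chosen to share."""
        return [c for c in self._evidence if c.actor == actor]

    def validate(self, actor: str) -> List[str]:
        """VALIDATION: state the reason behind each shared position."""
        return [
            f"{c.actor} holds '{c.content}' because {c.rationale}"
            for c in self.evidence(actor)
        ]


if __name__ == "__main__":
    engine = Engine()
    # A human shares a hunch; a machine declines to share a note.
    engine.capture("human:ana", "the route feels unsafe",
                   "two near misses last week", consent=True)
    engine.capture("machine:m1", "private note", "n/a", consent=False)
    for line in engine.validate("human:ana"):
        print(line)
```

A real system would of course need persistence, identity and much richer ways of shaping and patterning the evidence; the sketch only shows the shape of the three procedures and the absence of any admin/user split.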

So.

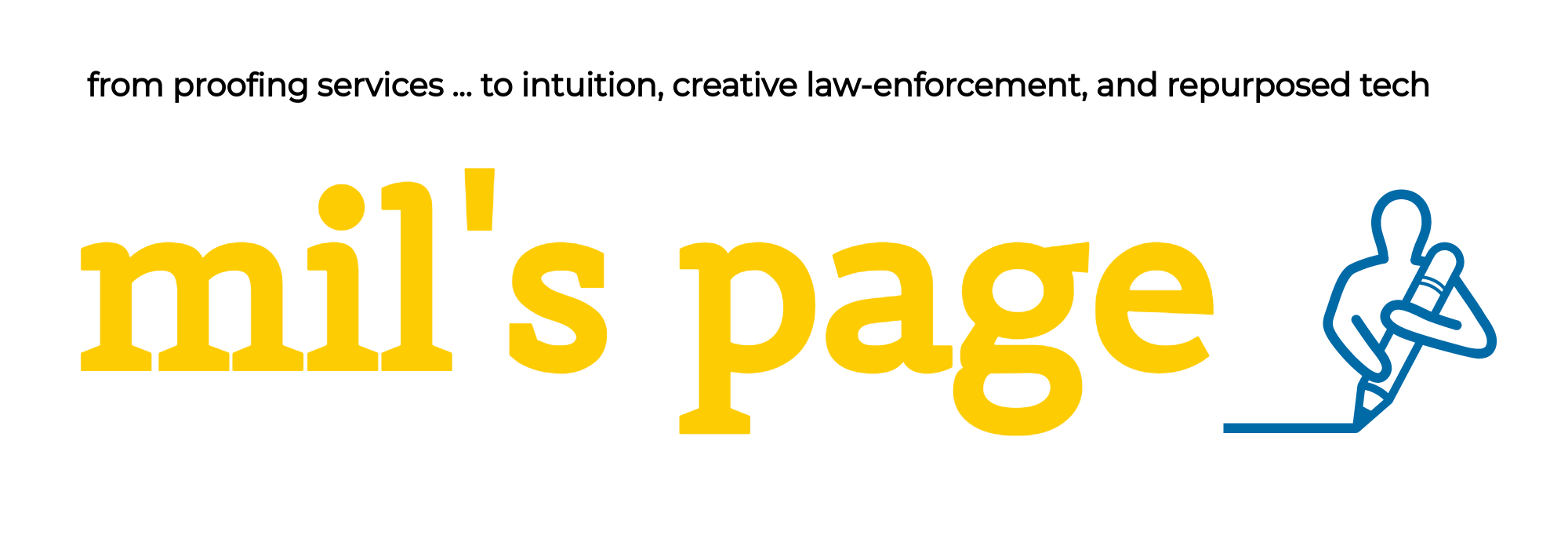

Alongside the clearly developed initial architectural philosophy stated above, I would then have us move on to working with the #platformgenesis progression of the original concept as it has existed since 2019:

• https://platformgenesis.com | see also the attached slides

Then, with further collaborative actions, especially in the light of other technologies developed since, we could begin to properly propose an absolutely future-proofed #ai and #it-#tech which, as per their real-world template of pencil and paper, could never NOT become privacy- and ethically-sensitive, whatever the regulatory demands created in the future by any global or regional body.

This would be my objective from two directions: legal and technological; abandoning neither for the other. And making both future #ai and #it-#tech so firmly #ethical and #privacypositive by design as to make regulatory innovations that might challenge them impossible to design.

To summarise

In a nutshell (or a chipset!), what I propose we do asap is move radically away from the more recent division of power and hierarchy between admins and users that has shaped #ai and #it ever since the arrival of the Internet, towards the suggested conflation of admin and user in one.

The division described has, in my judgement, severely and increasingly affected the citizens and workforces who, despite the challenges, strive to live and function creatively in Western corporates and wider societies, and who need to think freely in order to do so. These needs arise in many, if not all, fields of endeavour, and in most of them at mission-critical moments, when decisions have to be taken using an unpickable #highleveldomainexpertise (something we are sometimes also happy to call #gutfeeling) which becomes the only thing we may be able to reliably depend on.

The real existential challenge for our democracies and business discourses and praxis then arises when we fail to think as freely as others who, with a clear and ongoing possession and enjoyment of #privacysensitive and #secrecysensitive architectures and technologies, maintain their capacity to beat us hands-down, at least on the #intuition side of societal and business activities:

• https://crimehunch.com/terror | concentrate here on considering which team would be best at a new “what and how” (I’m happy, meanwhile, to recognise that pattern-recognition capabilities in machines will inevitably process vast amounts of data better when focussing on more concrete questions of “who and when”)

• https://www.secrecy.plus/why

• https://omiwan.com/the-foundations

Finally …

If you want to find out more about my latest ideas, why not go to the #sweden located and focussed online whitepaper I’ve been using to further my thought around complementary strands of complex thinking?