three good things happened today, all related to how i perceive the world.

1. first, i do have a death wish: it’s why hemingway clicked with me sooo immediately when i first read him.

2. however, i don’t want to be unreasonable or hurtful to others in pursuing this outcome. nor, most definitely, do i want support that would merely ameliorate it. amelioration is the biggest pulling of wool over our eyes in our western democratic times, and i don’t want to be part of a process that perpetuates its cruelties.

3. my strategy, that is, my only strategy, will from now on be as follows: i shall say and write about everything that i judge needs calling out, in such a way that the powerful, whom i will be bringing to book day after day after day, will one day have no alternative but to literally shoot me down.

in order, then, to put the above into effect, i resolve:

a) to solve the problem of my personal debt, acquired mainly through my startup activities, so that in future the only way the powerful shall be able to shoot me down is by literally killing me.

for my mistake all along was to sign up to the startup ecosystem, as it stands, as a tool for achieving personal and professional financial independence:

• startuphunch.com (being my final attempt at making startup human)

as this personal debt is causing me much mental distress, and is equally a clear weakness i show to an outside world i now aim to deconstruct comprehensively, i need, as a massive first step, to deal with it properly.

b) once a) is resolved, i shall proceed to attack ALL power wherever it most STEALTHILY resides.

that is, i shall focus on the stealthiest and most cunning versions of power.

the ones where it appears we are having favours done for us, for example.

specifically, big tech. but many, many others, too.

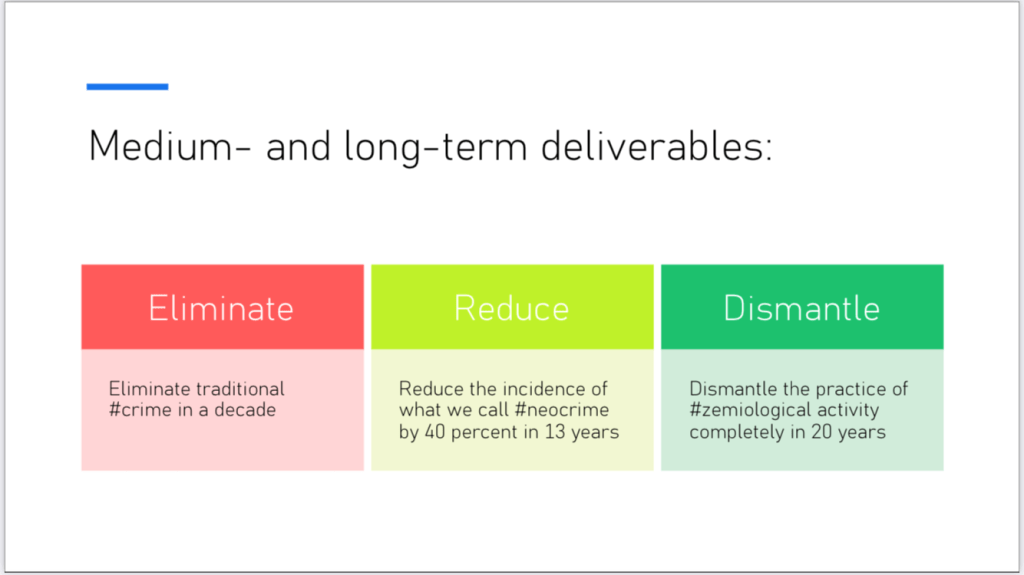

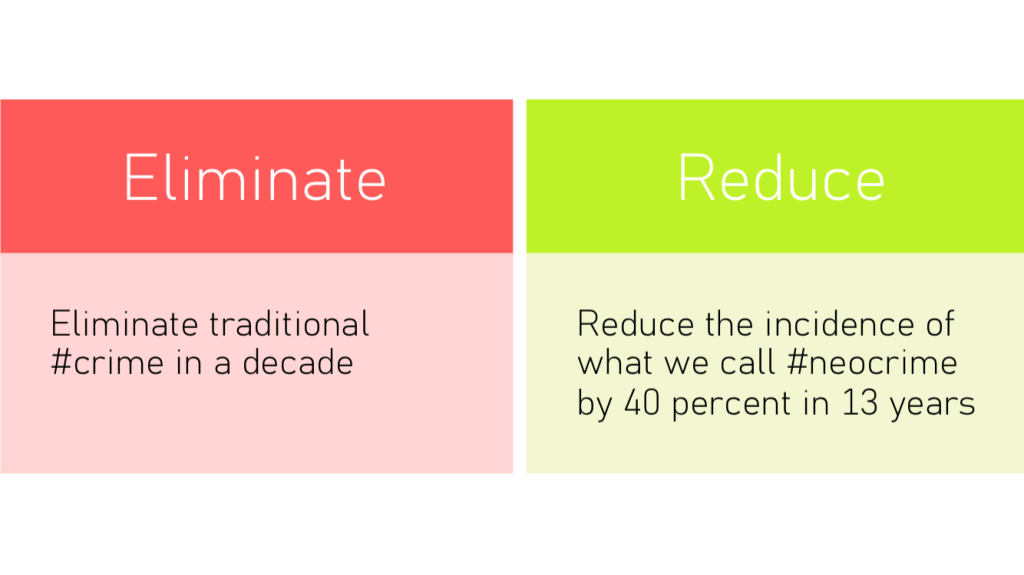

such power is essentially the driving force behind the harms zemiology studies: loopholes, neo-crimes, and similar legally accepted but criminally immoral societal harm, all of which, as a general rule, are right now very difficult to track, trace, investigate and prosecute.

• www.secrecy.plus/law | legalallways.com

this is why i have concluded that my natural place of work is investigative journalism. and where i want to specialise within that field is in how big tech has destroyed our humanity. but not as collateral damage, by accident, or as a side effect of some principal way of being it may legitimately manifest.

no.

purposefully; deliberately; in a deeply designed way, too … mainly to screw those clients and customers whose societies and tax bases it so voraciously and entirely dismantles.

to screw, and (equally!) to control. and then to dispose of them lightly and casually when they are no longer needed, or no longer beneficial to various bottom lines.

and so, as a result of all this, i see that having a death wish is beneficial: if channelled properly, as from today i intend it shall be, it will make me fearless as never i dared to be. fearless in thought and disposition. fearless even when made fun of.

not in order to take unreasonable risks with my life — or anyone else’s: no.

rather, to know that life doesn’t exist when the things i see clearly are allowed to, equally clearly, continue.

and to want, more deeply than ever in my life, to enable a different kind of life for everyone.

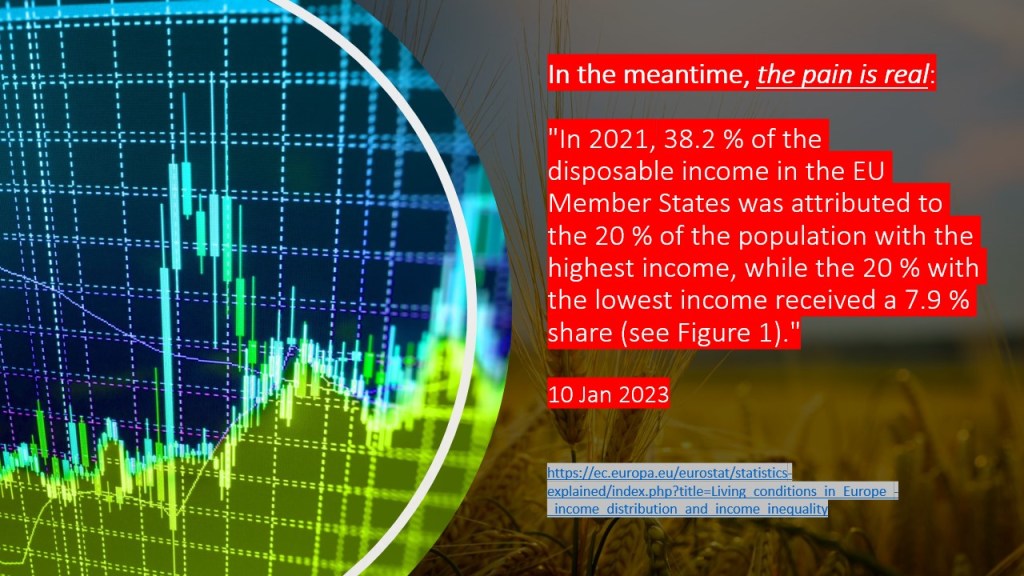

NOT just for the self-selected few: those who lead politics, business, and the acts of pillage and rape in modern society.

not just for them.

a better life for everyone, i say. everyone.

because i don’t care about mine. i care that mine should make yours fine.

now do you see? this is what makes me feel useful. nothing else. nothing else at all. and certainly not finding personal happiness. that would only blunt the tool.

🙂