introduction:

this post contains, more or less, a fortnight’s worth of thinking, plus the content of a synthesising presentation that sums up years of thought-experimenting on my part. i’ll start with the presentation, which shows where i now want us to go:

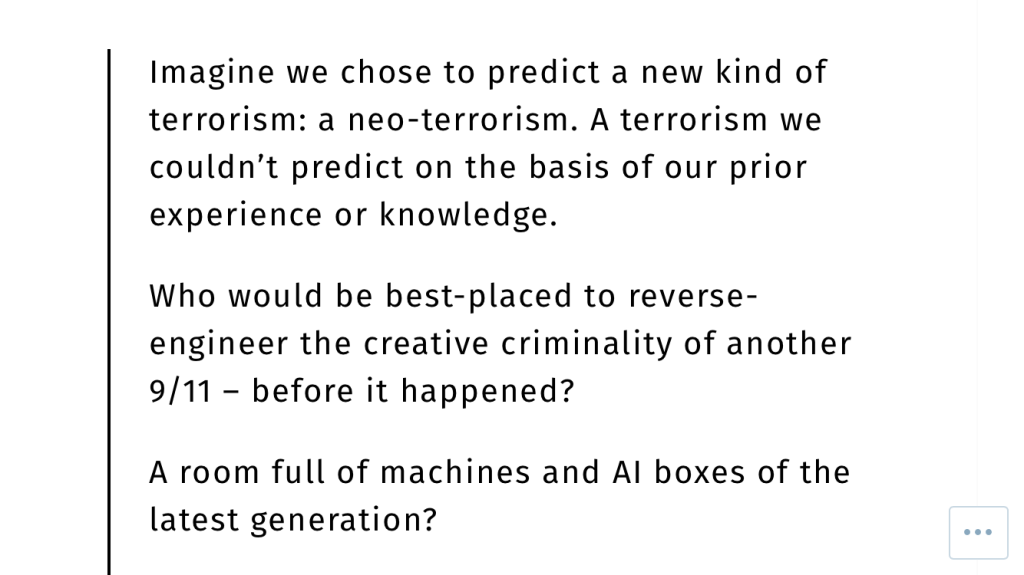

fighting creatively criminal fire with a newly creative crimefighting

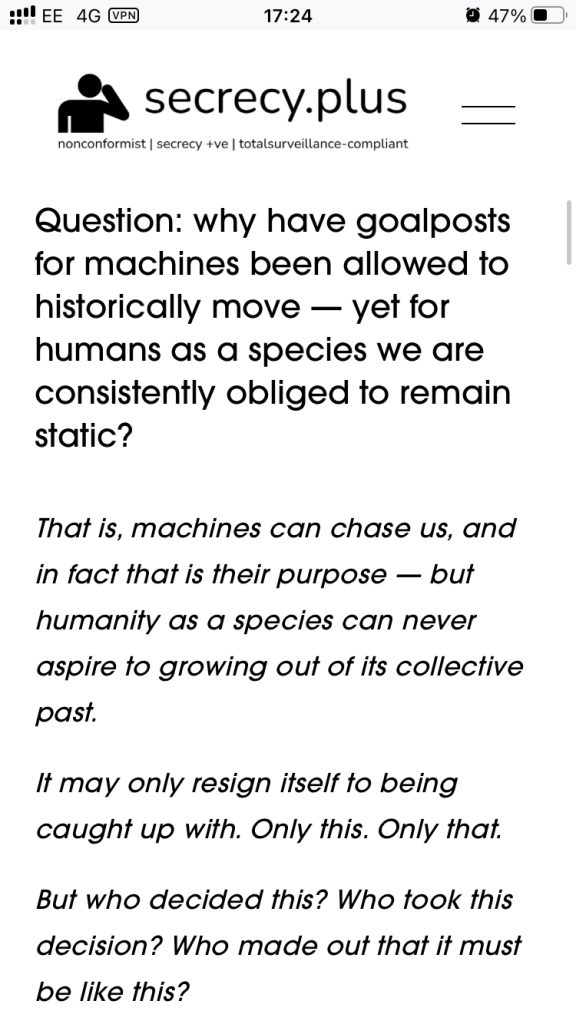

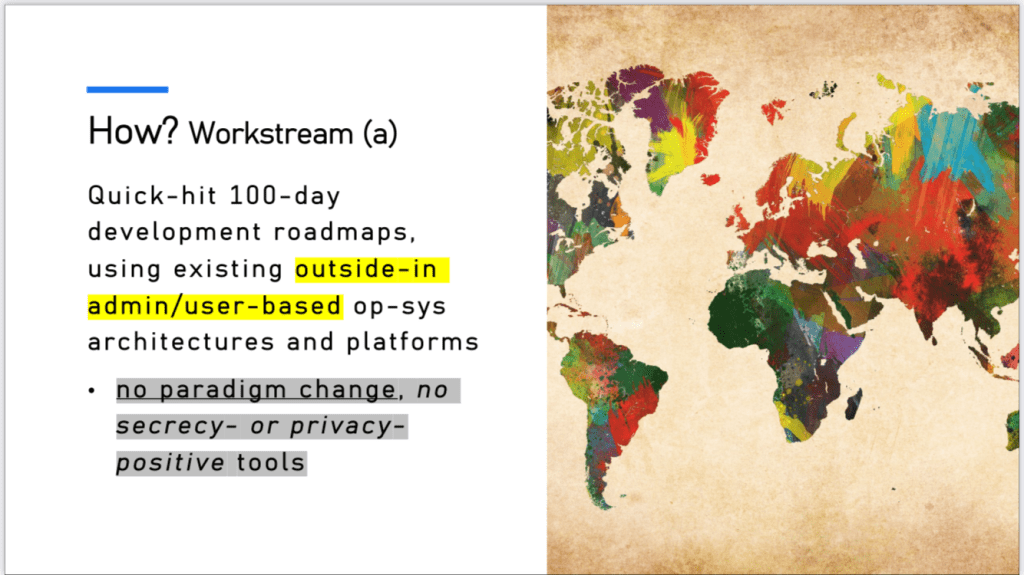

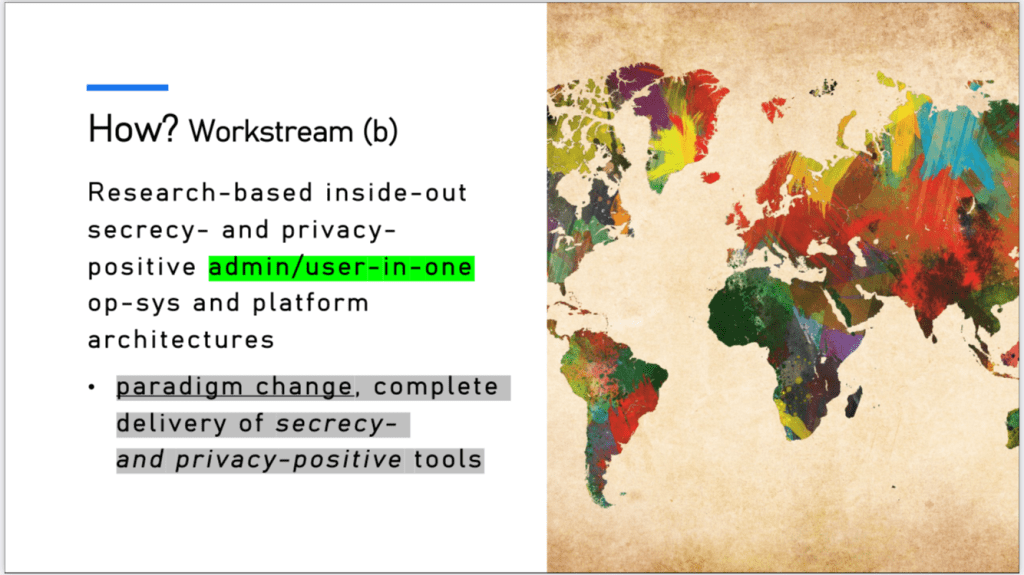

i created the slide below for a presentation that the uk organisation public asked me to submit to a european digital agency pitching process. the submission didn’t succeed. the slide, however, is very, very good:

the easy answer is that it obviously benefits an industry. the challenging question is why this reality has been allowed to perpetuate itself, because real people and democratic citizens have surely perished as a result: maybe unnecessarily.

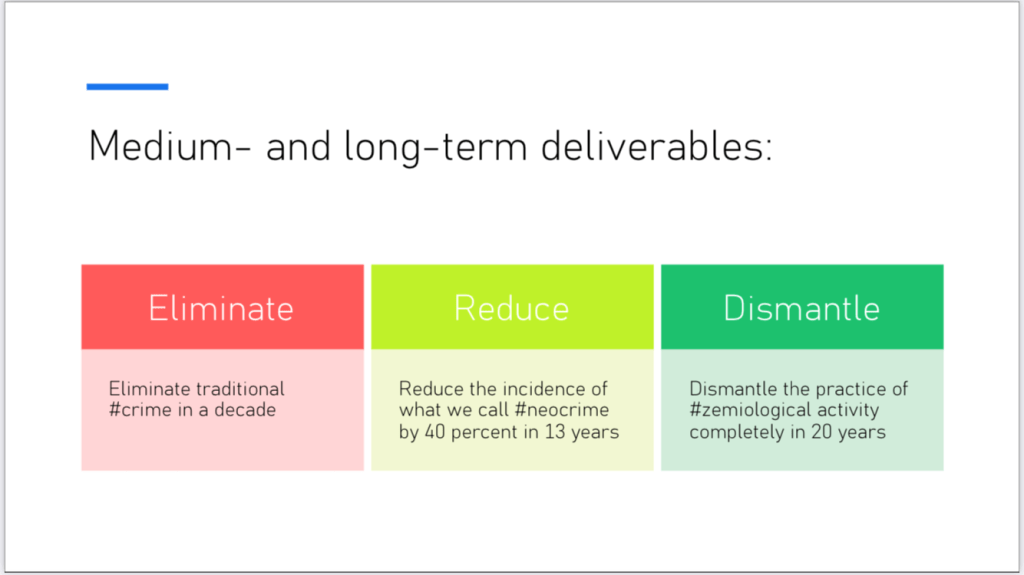

here is the presentation, from which the above slide is taken, that public declined to accept for submission to the european digital process in october 2022:

• presentation submitted to public in october 2022 (pdf download)

where and how i now want us to come together and proceed to deliver on creative crimefighting and global security

the second presentation, below, sets out my thinking today: no caveats, no red lines, no lines in the sand any more. if you can agree to engage with the process it describes, there are no longer any conditions on my side.

well, maybe just one: only western allies interested in saving democracy will participate in, and benefit both societally and financially from, what i’m now proposing:

• www.secrecy.plus/fire | full pdf download

following on from the above, here are thoughts i wrote down today on my iphone notes app, edited so that they relate only to the above. the notes app is a regular go-to tool for my thought-experimenting:

on creating a bespoke procurement process for healthy intuition-validation development

step 1

pilot a bespoke procurement process for us to use over the next year.

we keep in mind the lessons of a recent phd, to which i’ve had partial access, on how such processes are gamed everywhere.

we set up structures to get it right from the start.

no off-the-peg solutions sold as bespoke and at a premium, even while we are still only using repurposed tech.

step 2

we share this procurement process speedily with other members of the inner intuition-validation core.

they use it: no choice.

but having no choice brings a quid pro quo: total freedom to develop and contribute to the inner-core ip in the ways that best fit each member’s culture.

and also, looking ahead, freedom to commercialise it onward in their own zones of influence, where they know what’s what and exactly what will work.

and so we end up with a clear common interest and target: one we all know and agree on.

mil williams, 8th april 2023

historical thought and positions from late march 2023

finally, an earlier brainstorm from the same process described in part two above, conducted in late march of this year. it is now a historical document and position, included to provide a rigorous audit trail of why free thinking is so important to foment, trust, believe in, and actively encourage.

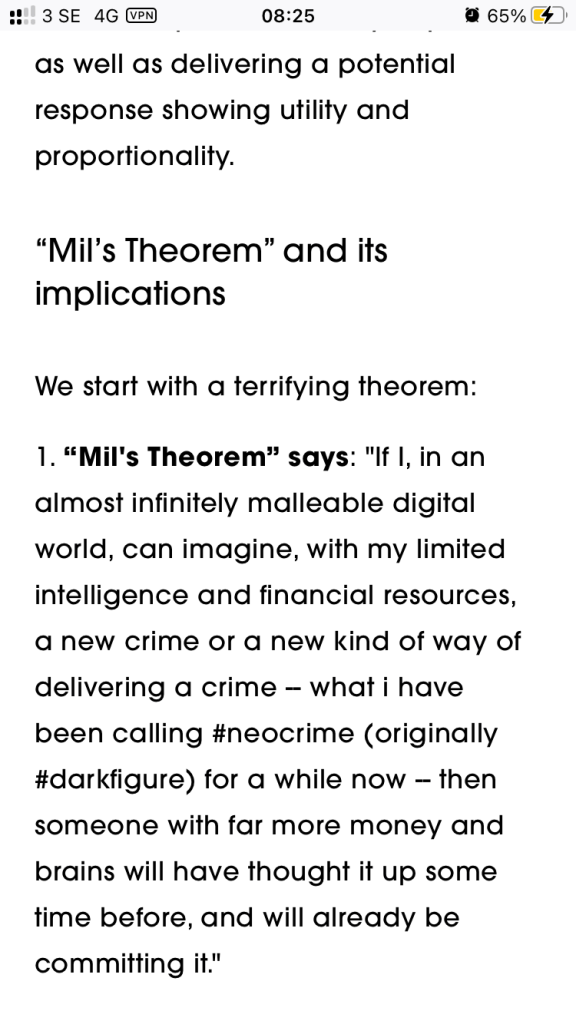

we have to create an outcome in which we know we are thinking unthinkable things, far worse than any criminal will ever be able to, in order to prevent them. we need a clear set of ground rules, but these rules shouldn’t prevent the agents from thinking comfortably (insofar as that is the right word) about things they never dared to approach.

the problem isn’t putin or team jorge. or rather, it is, but not the part we see. it’s what they and others do that we don’t even sense: the people who do worse, and the events that hurt even more … the things we have no idea about.

if you like, yes, the persian proverb: the unknown unknowns. i want to make them visible. all of them. the what and how. that’s my focus.

trad tech discovers the who and the when. my tech, by contrast, discovers the what and the how before they are even a glint in criminals’ eyes.

so we combine both types of tech in one process that doesn’t require either culture to adopt the other’s way of working. side by side, yes; in the same way, no. this way, we guarantee each culture the purest state it needs.

my work and my life (my love, if you prefer) will not only be located in sweden but driven from here too. that’s my commitment, and not a reluctant one in any way whatsoever.

[…]

i have always needed to gather enough data. now that i have, the decision is surely simple.

mil williams, 21st march 2023