introduction:

maybe #ai can do a few things humans are paid to do. but that doesn’t mean that what businesses everywhere pay us to do is where our real creativity, as unpredictable humans, gets exhibited, or even widely fomented.

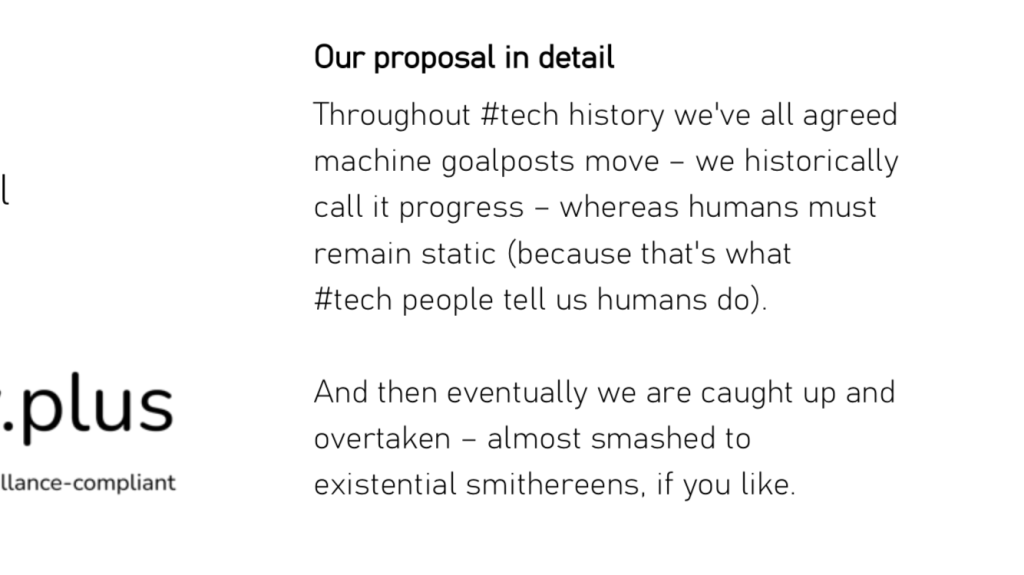

the proposition:

maybe #it-#tech’s architectures have for so long forced us, as the humans we are, into undervaluing, underplaying and underusing our properly creative sides that what #ai’s proponents deem human creative capabilities are actually the dumbed-down instincts and impulses of what would otherwise be sincerely creative human thinking. that is, the thinking that would emerge given the architectures i suggest we make more widely available: for example, just to start with, a decent return to a secrecy-positive digital form of pencil & paper, so we DON’T consistently inhibit real creativity and instead encourage a return to our much more creatively childlike states of undeniably out-of-the-box enquiry …

augmentedintuition.com | a historical whitepaper advocating an augmented human intuition

in this sense, then, the real lessons of recent #gpt-x are quite different: not how much #ai is now delivering, but how fundamentally toxic to human creativity the privacy- and secrecy-destroying direction of ALL #it-#tech over the years has become. after all, this very same #tech did start out, in its early days, as hugely secrecy- and privacy-sensitive: one computer station; one hard drive; no physical connections between yours and mine. digital pencil & paper indeed!

it’s only since we started laying down cables and access points for some, WITHOUT amending the radically inhibiting architecture of all-seeing admins overlording minimally privileged users, that this state of affairs has come about: an #it-mediated and supremely marshalled & controlled human creativity.

no wonder #ai appears so often to be creative. our own human creativity has been fatally winged by #tech, to the extent that the god now erected as #ai has begun to make us entirely in HIS image, NOT to extend and enhance our own intrinsic and otherwise innate preferences.

summary:

it’s not, therefore, that #it-#tech has been making #ai more human: it’s that the people who run #bigtech have been choosing to shape humans out of their most essential humanity.

and so as humans who are increasingly less so, we become prostrate-ducks for their business pleasures and goals.

an alternative? #secrecypositive yet #totalsurveillance-compliant software and hardware architectures: back, then, to recreating the creativity-expanding, -enhancing and -upskilling tools that a digital pencil & paper used to deliver:

secrecy.plus/spt-it | a return to a secrecy- and privacy-positive “digital pencil & paper”

a final thought:

in a sense, even from #yahoo and #google #search onwards, both the #internet and the #web were soon designed (it’s always a choice, this thing we call change: change itself is always inevitable, true, it’s a fact … but the “how”, its nature, is never inevitable) … so from #search onwards, it all, in hindsight, became an inspectorial, scraping set of tools to inhibit all human creative conditions absolutely.

the rationale? well, #bigmoney needed consumers who thought they were creators, not creators who would build distributed and uncontrollable networks of creation under the radar.

and then, with the advent of newer #ai tools, which serve primarily to deliver on the all-too-human capability to bullshit convincingly, #it and its related technologies are finally, openly, brazenly, shamelessly being turned on all human beings who don’t own the means of production.

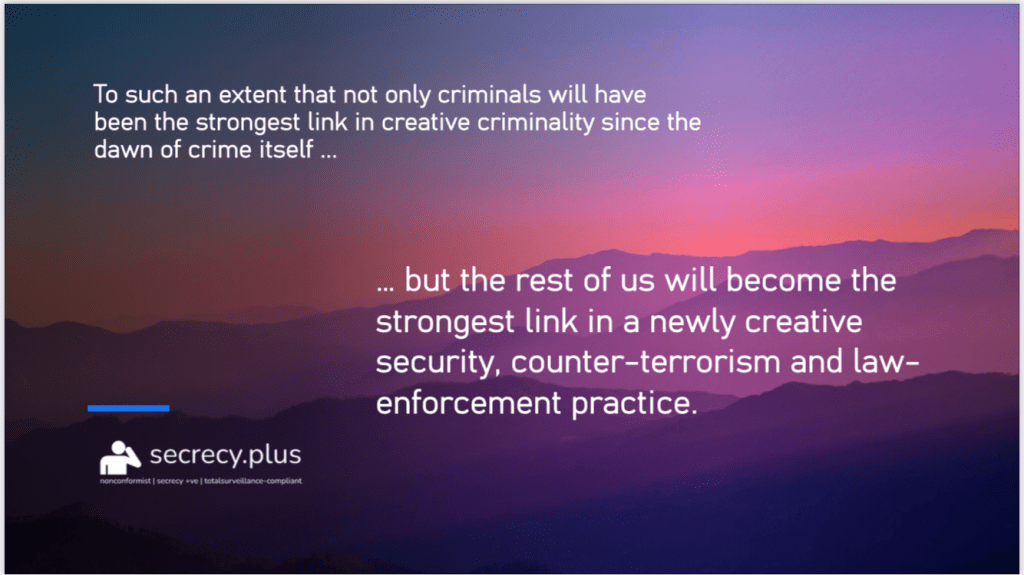

we were given the keys to the kingdom, only to discover it was a #panopticon we would never escape from. instead of becoming the guards, that is to say the watchers, we discovered, too late, that we had been forcibly assigned the role of the watched:

thephilosopher.space | #NOTthepanopticon

and so not owning the means of production, with its currently hugely toxic concentrations of wealth, means that 99.9 percent of us are increasingly zoned out of the minimum conditions real human creativity needs in order even to begin to want to function in a duly creative manner at all.

that is to say, imho, practically everything we see in corporate workplaces that claims the tag of creativity is simply a repurposing of what already exists. no wonder the advocates of #ai are able to gleefully proclaim their offspring’s capabilities to act as substitutes for such “achievements”.

wouldn’t you, with all that money at stake?

secrecy.plus/hmagi | #hmagi