Introduction to this post:

Today I had a brief video-chat with someone positively predisposed to the idea of #intuition. He even saw it as bordering the mystical. He was Indian. Indians love #intuition, it’s true. But #it-#tech Indians have caveats they all seem to share. This is something I have seen before: real deep trust in human #intuition’s capabilities but a real distrust in any chance of ever validating it usefully.

This man is also involved professionally in #it-#tech. When I gave him four examples of how not all #tech had chosen to diminish human beings in the field of non-traditional #datasets, he was still unconvinced.

The four templates we should look to when validating #intuition:

Example 1: the #film-#tech industry from its beginnings over a hundred years ago has decided to almost always amplify and enhance existent human abilities: more voice with a microphone; keener vision with a camera; greater expressiveness with the language of close-up. And in so doing it’s made billions, perhaps trillions, in the paradigmatic century of its total cultural dominance.

Example 2: in my younger years video was not admissible evidence in the #criminaljustice system of my homeland. Now it is. What changed to put this tool in the hands of #lawenforcement and of #justice’s stakeholders and subjects, eliminating procedural waste so dramatically? We didn’t change any #justice system: we just introduced new tools to validate video evidence, so that the hidden knife in the real-life holdup was proven to have been used via a validated electronic cousin.

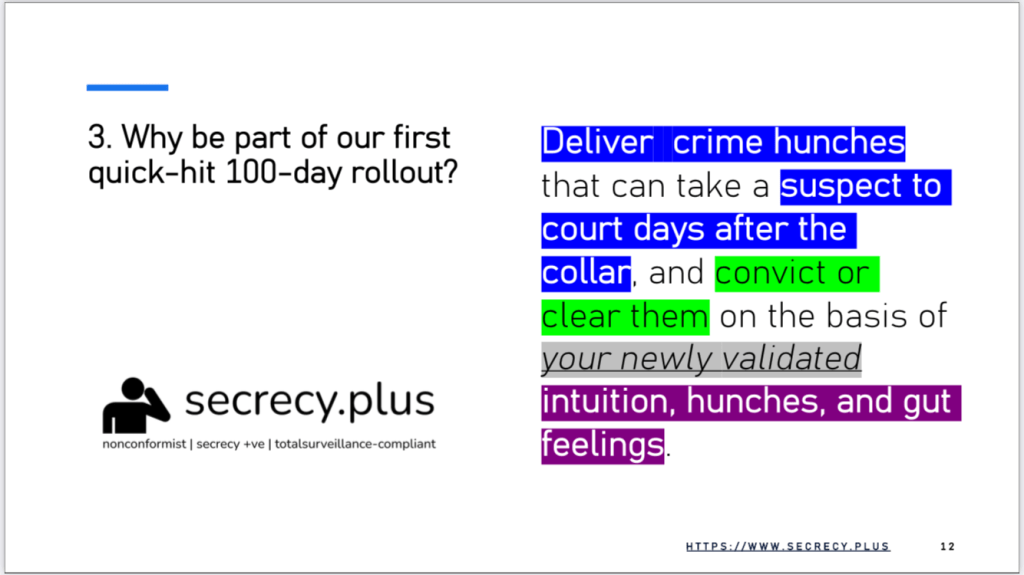

Example 3: the detective who just knows that someone is lying in an interrogation may be wrong too, on occasions; but often they are all too accurate. Yet it then takes due process months, maybe years, to arrive at the same conclusion. What if we could validate that #hunch? Not prove it right, but decide definitively (as the #video example above now allows us to do much more speedily) whether something that is MAYBE wrong but ALSO maybe right actually holds up, so that this detective’s #hunch would bring about the most appropriate conviction (or release)?

Example 4: I then suggested to my interlocutor that we should come up with a new 9/11 ourselves, before one strikes us again. Here, I suggest we learn how to reverse- or forward-engineer bad human thought, so as to stop it in its tracks, with the most #creativecrimefighting you could conceive of:

But not the “when” or “who” of what is already being planned out: in these cases, machine automation operates really competently on the basis of existent #lawenforcement and #nationalsecurity #it-#tech data-gathering processes …

Rather, I mean here the “what” and “how” of an awfully #creativecriminality. I say this because 9/11 was a case where assiduous machines, which humans used conscientiously and in all good faith, were roundly beaten by horrible humans who used machines as extensions of themselves terrifyingly well. It was a case, therefore, of simply failing to support existent habits of #creativecrimefighting (because detectives can already be immensely creative in teasing out narratives that explain otherwise insoluble crimes): the conventional #it-#tech choices and strategies on offer have absolutely NOT, since time immemorial, cared to foreground and upskill human #intuition.

What happened next and, maybe, why:

When I said to my interlocutor that these four examples surely served as robust precedents and templates for proceeding to validate #intuition and #crimehunch insights just as deeply, as well as to an equally efficient end … well, this was when he veered back to talking of #intuition’s impenetrable workings. “Yeah,” he was saying, “intuiting is great process … but don’t dare to untangle it.”

And it’s funny how, in an industry (that is, #it-#tech) whose richest members are incredibly wealthy on the back of their particular and often mostly privately privileged visions of how the future must become, these same wealthy individuals find themselves incapable of conceding that such a profoundly value-adding activity for them should have its own wider validation systems for us all. Why? Well. In order that EVERYONE who cares to might acquire a distributed delivery of similar levels of genius-like thinking: what I have in fact called the “predictable delivery of unpredictable thinking”.

How I would, then, most like us to proceed:

I’d like us to create software, wearables, firmware and hardware environments where not only a select few can enjoy being geniuses, but where we all have the opportunity to be upskilled and enhanced into becoming value-adding, natively intuition-based thinkers and creators:

• complexifylab.com | sverige2.earth/canvas

One small and hugely practical example:

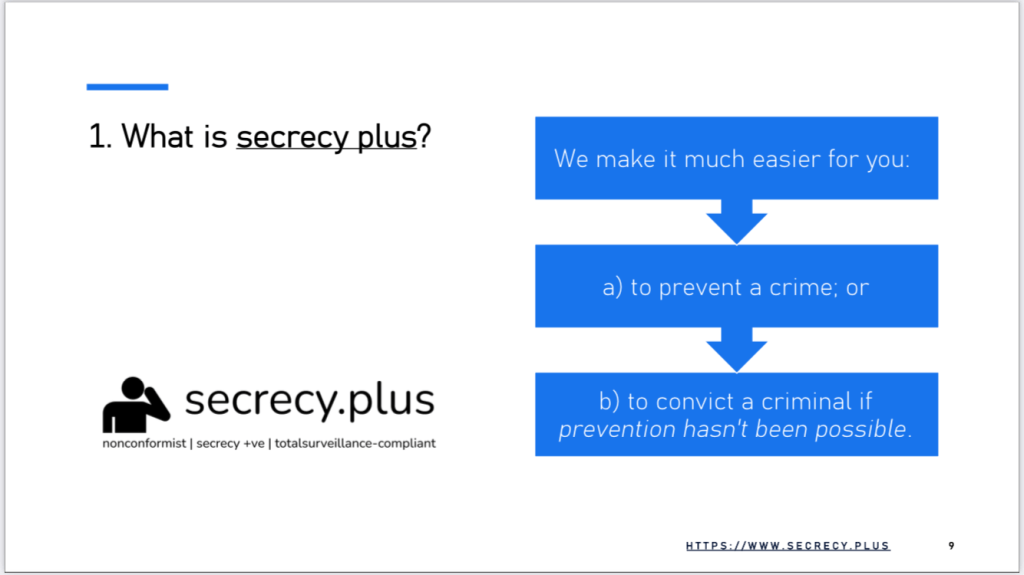

Attached below is just one small application we might develop using existent architectures (not the particular ones I think more appropriate for truly deep #intuitionvalidation, where we conflate admin and user in one #datasubject), along with a proposed 100-day roadmap to demonstrate that the beautiful insight I had more than a year ago is actually, honestly, spot-on:

1. That #intuition, #arationality, #highleveldomainexpertise, #thinkingwithoutthinking, and #gutfeeling are potential #datasets as competent as #video suddenly became when we finally believed its validation was a real deliverable.

2. That all the above all-very-human ways of processing special #datasets actually contain zero #emotion and even less of the #emotive when it’s their processes we’re dealing with. And that when they do EXPRESS themselves emotionally, it’s out of the utter frustration which the driver and #datasubject of such #intuitive processes suffers as a consequence of the fact that no one at all, but NO ONE, in #it-#tech cares to consider #intuition and related processes as #datasets worthy of their software and platform attentions.

So, out of frustration, I say … but never out of the intrinsic nature of such #intuitive patterns of collecting #data and extracting insights, which people like that detective I described earlier do sincerely believe in when driving the most mission-critical #publicsafety operations of all.